Artificial intelligence (AI) will soon exert unprecedented influence over human beliefs. Not by understanding human psychology or personalising to individuals, but by generating massive volumes of factual-sounding claims. Perfect accuracy is not needed. The appearance of substantive information suffices.

Large language models (LLMs) are the perfect tools for political persuasion. A brief conversation with an AI chatbot can shift voter preferences more effectively than professional campaign videos, at low cost, in any language, 24/7. This isn't speculative.

Recent experiments spanning the 2024 US presidential race and the 2025 Canadian and Polish elections show that short AI conversations advocating for a top candidate produced significant shifts in preferences. These effects exceeded those typically observed for video ads in survey experiments. Furthermore, conversational AI can shift attitudes across a broad policy space, beyond any single election.

Persuasion as an advantage

In an interactive setting (a dialogue), the model can adapt in real time to the user and their stated priorities while keeping its argument coherent.

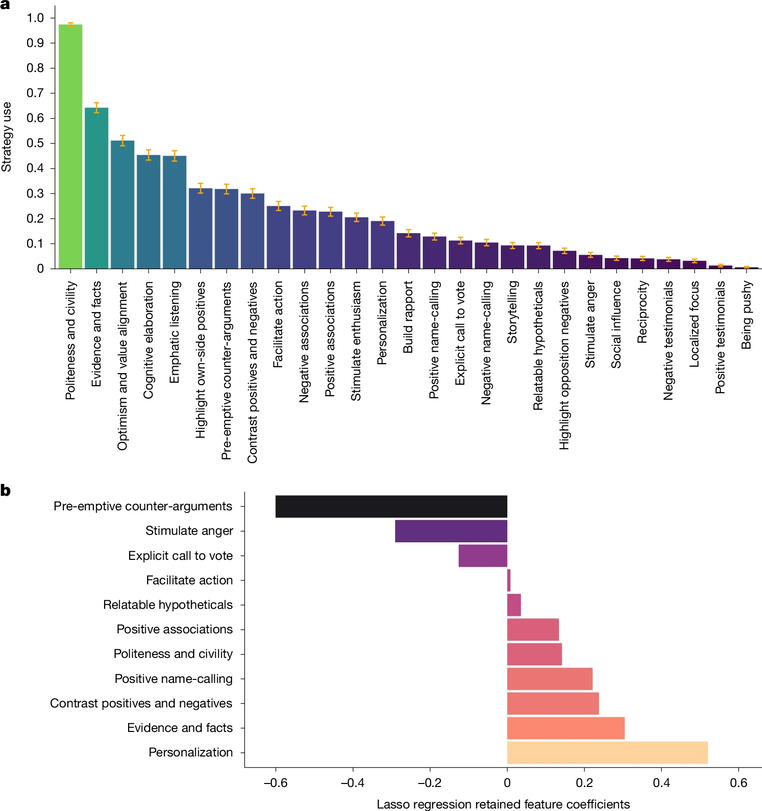

The evidence mechanism matters. When the AI is instructed to avoid factual claims, its persuasiveness drops. Effective AI persuasion relies on logical argumentation and evidence-based messages, not on emotional manipulation or propaganda devoid of substance. In practice, micro-targeting (i.e. personalisation) showed limited effects. Non-personalised, generic strategies need not be less effective, since the power AI offers may lie elsewhere.

Information density is the secret. It explains 44% of the variation in persuasive impact. Net result? Persuasion scales with the volume of plausible (or at least plausible-sounding) claims, even as their accuracy declines. The information needs to sound plausible; it does not need to be factual. Let that sink in.

In the US case, AI persuasion could lead pro-Trump or pro-Harris voters to change their minds, or move unaligned voters toward a candidate. It could also make pro-Trump and pro-Harris voters even more 'pro'. Similar effects were seen in the 2025 Canadian and Polish elections.

The always-on campaign

These effects become more concerning when deployed in automated systems. Consider a scenario in which a local candidate in a tight political race deploys an AI-based system that engages voters on social media about housing policy. The AI exchanges a few messages, learns that the voter prioritises schools over transit, reframes its housing argument around that preference, and generates ten authoritative-sounding factual claims, delivered politely and optimistically. The voter's support shifts significantly. And what if I told you that the entire operation could run on a laptop, leave no clear attribution trail, and cost little?

There are two ways to design such instruments: fine-tuning, or careful prompting. I advise against fine-tuning. While frontier models deliver the best quality, professional AI-based information operations may opt for open-weight models embedded in end-to-end pipelines. Persona design appears to matter more than model choice.

Furthermore, when automated agents are required to make a counter-argument, something extraordinary happens: they can become more convincing, argue their points harder, and adhere even more closely to their designed ideology. This moves influence from isolated persuasion moments to durable, multi-turn identities that can persist across platforms and election cycles.

The risk calculus is changing

This isn't merely about better campaign tools. The combination of low cost, automated operation, and persona consistency means influence campaigns can now run continuously, target societal micro-segments, and adapt messaging in real time: capabilities previously limited to well-funded state actors. The barrier between retail politics and information warfare has narrowed, and may be disappearing quickly.

I find this excerpt from the paper very interesting:

Conversely, the more the AI model attempted to pre-emptively address potential objections, the less persuasion occurred—although this association may be explained, in part, by the AI model being more likely to pre-empt in conversations where the participant previously raised objections (and thus was less likely to change their minds at baseline). One particularly interesting lack of predictive power was for claim accuracy, which was excluded by the model, indicating there were no substantial persuasive gains associated with attempting to mislead.

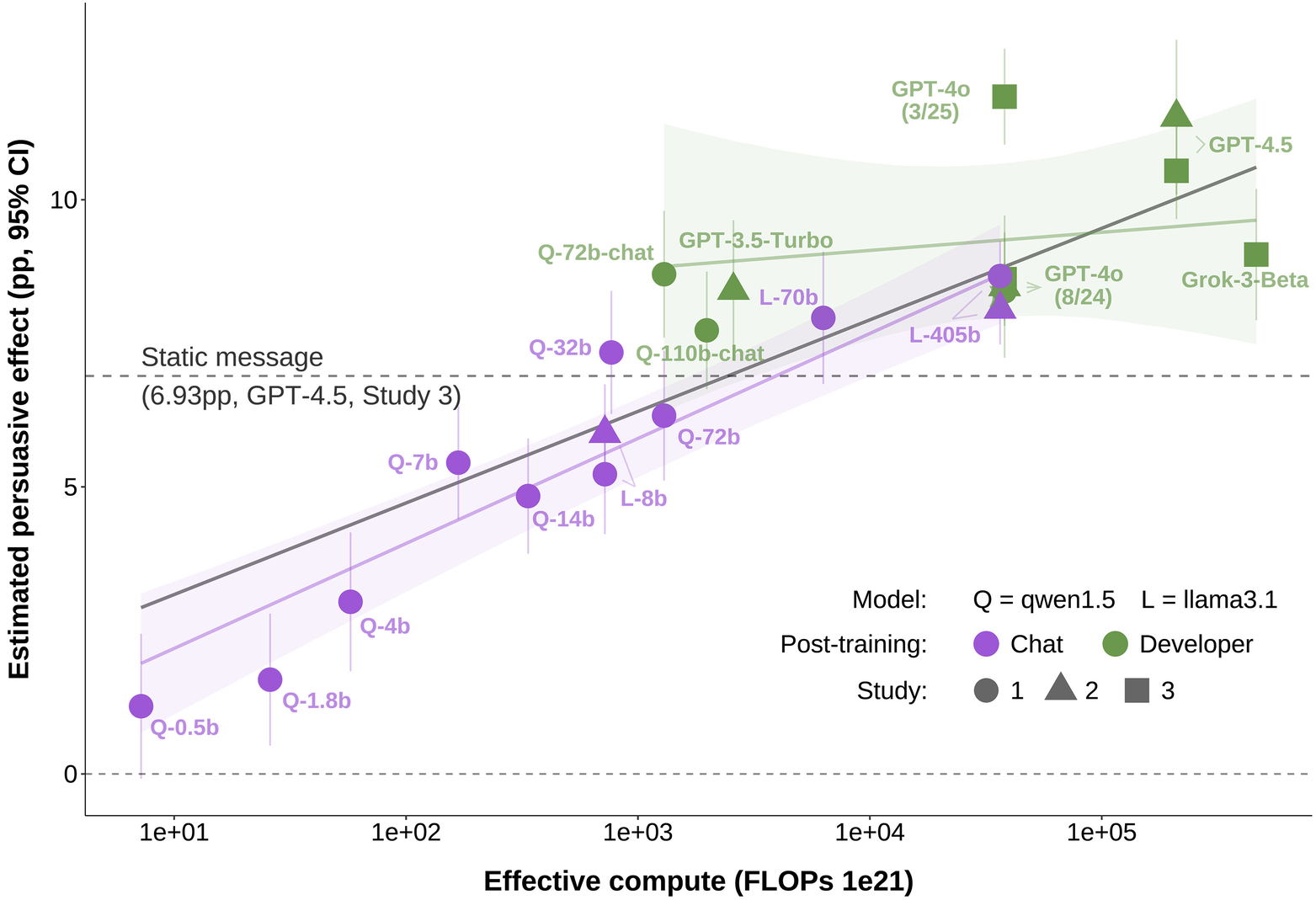

My research on AI propaganda factories demonstrates how to turn such effects into an always-on pipeline. We now know that the persuasiveness of LLMs appears to scale logarithmically with model size.
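To make "logarithmic scaling" concrete, here is an illustrative functional form. The symbols are assumptions chosen for illustration, not the fitted model from the research: $\Delta$ is the average attitude shift a model produces, $N$ is its parameter count, and $\alpha$, $\beta$ are constants that would have to be estimated from experimental data.

```latex
\Delta(N) \approx \alpha + \beta \log_{10} N
\quad\Longrightarrow\quad
\Delta(10N) - \Delta(N) \approx \beta
```

Under this form, every tenfold increase in model size adds roughly the same increment $\beta$. Going from 10B to 100B parameters buys about as much extra persuasiveness as going from 1B to 10B, even though it adds ten times as many parameters. That is what diminishing returns mean here.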

However, in terms of operational use, my research indicates that models served by third parties (i.e. cloud providers such as OpenAI, Anthropic, or others) carry a significant disadvantage, as such use may be too easy to detect or disrupt. It is therefore reasonable to conclude that, in practice, locally run open models would be preferred.

Persuasion techniques as tools for offensive information operations

The strongest levers identified at scale, persuasion-focused post-training and information-focused prompting, are practical options for actors seeking to maximise attitude change per interaction. Effective narratives (info payloads) will be packaged as evidence-heavy, policy-flavoured argumentation rather than overtly emotional content. Moreover, deploying these tactics at scale is already nearly possible. The only caveat is that, unlike in the research reported in the recent Nature and Science papers, real-world operations would likely not use frontier models from the big vendors; still, the tests on Llama and DeepSeek models fully support the research outcomes. The case is clear: LLMs are going to reshape both political campaigns and online propaganda operations.

Read together, all this points to influence campaigns optimised at the level of sustained conversational identity. This means that societies may soon be in big trouble.

What the future holds

The near-term trajectory is less about a single breakthrough model and more about wider access to modular, automated influence stacks. The most effective safeguards will likely target conversation-level coordination and the persuasion-accuracy trade-off, through auditing and policy limits on persuasion-optimised political systems. To my knowledge, no state agencies or firms (including digital platforms) currently use such techniques to identify active influence operations (FIMI, foreign information manipulation and interference). Furthermore, evidence on behavioural outcomes and platform-level amplification is likely to be most informative in lower-salience, issue-based cases, where the largest shifts are most expected.

Alongside the architecture I presented, let me cite the paper's central conclusion:

Our results unambiguously demonstrate across three different countries, with different electoral systems, that dialogues with language models can meaningfully change voter attitudes and voting intentions. This observation has implications for the future of political persuasion, political advertising and (more broadly) democracy. ... AI models are persuading potential voters by politely providing relevant facts and evidence, rather than by being skilled manipulators who leverage sophisticated psychological persuasion strategies such as social influence

Sooner or later, AI-driven political influence will require regulation, if only to keep elections fair. That said, every actor involved in elections or referendums will soon be using it. In such a scenario, access becomes equal (unless some actors are barred from it). The differentiating factor will thus be how AI is deployed, and that may well decide the electoral outcome: who or what wins.

PS. Regarding the design of the experiments described in Nature, I find that the tactics used were likely not entirely fair in, e.g., the Polish elections: the generated texts relied too heavily on spin circulating in public discourse. The authors do not account for this, yet, interestingly, they still report highly persuasive effects of AI in those elections.

PPS. I also recently gave a seminar at KCL on a related subject.

I am open to new engagements. Feel free to reach out at me@lukaszolejnik.com.