It is surprisingly difficult to find realistic, interesting and creative privacy case studies, and perhaps even more so for major software. There is little incentive to make this kind of work public: employees are often paid to do it in-house, and their compensation typically comes with a demotivating NDA. Finding the right angle is also often difficult. I have some experience in this sphere, both technical (the battery case study, Web Bluetooth privacy, London Tube Wi-Fi tracking) and regulatory (a technical overview of GDPR fines, an unsurprising observation about an imminent outcome of GDPR). Today I’ll do both at once, with more emphasis on the second aspect (GDPR).

With the growing pace of cloud services and the Internet of Things, ordinary users find it increasingly hard to understand how technology works, and vendors must be aware of it. The line between what is local (a desktop computer, a smartphone) and what is remote (a cloud system; someone else’s computer) is getting blurrier. About two years ago, I remarked how a web mechanism called ‘Web Bluetooth’ may blur the line between local devices and the cloud. Today we are seeing another example of local (desktop, smartphone) meeting cloud. Well-designed user interfaces are of particular concern here.

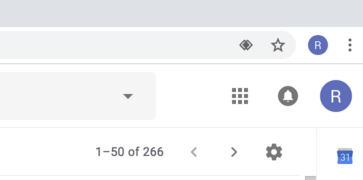

The latest release of a major web browser drops another stone here. Starting with Chrome 69, when you log into a Google service (GMail, or so), Google Chrome is effectively logging you into the browser. It looks like that:

This change apparently “solves” a hypothetical source of user confusion (“am I logged into the service or into the browser?”), while at the same time creating others. Logging in is made “consistent” between the web and the browser. While some people find it problematic and confusing (e.g. here or here), let’s trust that the Chrome team has thought it through well. We can trust Google, as Chrome is the most secure browser out there, and maybe the feature will allow ads to be better tailored in the future. There are good reasons for using this browser. Let’s assume the feature is needed. The problem lies elsewhere.

Sudden change

For some reason, this change is not mentioned in the official Chrome 69 release notes, nor in the announcement lauding the user interface upgrade. It simply appeared out of the blue. Things now work very differently than in, say, Chrome 68 (released 2018-07-24).

Privacy Policy issue

According to the Google Chrome privacy policy, the “signed-in Chrome mode” works differently from the basic (signed-out) mode. In particular, “your personal browsing data is saved on Google's servers and synced with your account. This type of information can include: Browsing history, Bookmarks, Tabs, Passwords and Autofill information, Other browser settings, like installed extensions”.

Read literally, the privacy policy suggests this could now happen as soon as a user is signed in. In practice, however, data synchronisation does not appear to happen by default (as also confirmed here). This behaviour does not seem to be accounted for in the privacy policy, which means that either the policy is obsolete (and needs updating, which would suggest potential issues in the internal privacy program, and possibly its priorities), or the mechanism may change in the future.

User Interface confusion

There is a justified concern that browser sign-ins currently happen without the user’s prior knowledge, awareness, or consent. This may well be seen as a regression in privacy user interface design (though I do not intend to argue that it proves privacy is a second-class citizen), not least because it runs against the principle of least surprise.

For a change in mechanics as dramatic as this, one could imagine, for example, displaying a notice to the user upon the first launch of the updated browser. This did not happen with Chrome 69, which means that the way a key technology operates was changed abruptly, for millions of users.

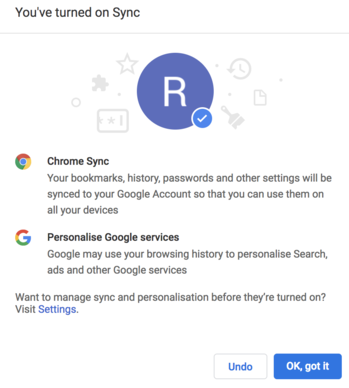

Mistaken synchronisation

Furthermore, during testing I managed to deliberately-inadvertently synchronise my settings to the cloud simply by clicking “sync”. At that point I could still click “undo”, but the information page encouraged me to look into the settings first. After clicking “settings”, however, “undo” was no longer available: the user interface happily assumed that yes, I did in fact want to upload my data first. And suddenly, instead of “Last time synced in 2017” I saw: “Last time synced on Today”.

Of course, I then clicked “delete”. Note: I tested this on the Canary version.

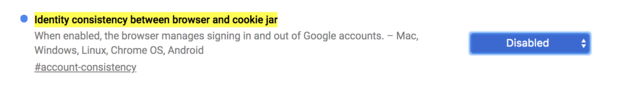

Disable by default

It looks like setting the flag chrome://flags#account-consistency to “Disabled” might solve the problem for concerned users. The flag is quite oddly documented, though.

This makes a fascinating GDPR case study. However, I will not go into detail on whether the GDPR articles concerning consent are affected here. Consent is overrated. Instead, I focus on two lesser-known, more specialised provisions.

Data Protection Impact Assessment

Article 35 of the GDPR requires an assessment that takes into account the “fundamental rights and freedoms” of individuals (privacy being one of them). I previously wrote about making DPIAs (here). Now, I am not sure Chrome has a DPIA at all. Projects started before the GDPR do not need to have one.

However, a data protection impact assessment (DPIA) must be updated when significant changes to the system are introduced. In that case, pre-GDPR projects that lack a DPIA effectively need to have one made. While I do not want to debate whether Chrome releases 67 and 68 introduced such changes, I would say version 69 may have crossed the threshold. If a Data Protection Authority decides it needs to see the DPIA, it can formally request that the vendor deliver the document. Under the GDPR (Article 83(4)), infringements of the DPIA requirements are subject to fines of up to 10 000 000 EUR, or up to 2% of the total worldwide annual turnover of the preceding financial year, whichever is higher.
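The “whichever is higher” rule is easy to misread, so here is a minimal Python sketch of the Article 83(4) ceiling. The function name is hypothetical, it computes only the statutory maximum (the actual amount is decided case by case, and the turnover-based alternative applies to undertakings), and the turnover figures below are purely illustrative.

```python
def gdpr_art83_4_max_fine(worldwide_annual_turnover_eur: float) -> float:
    """Hypothetical helper: upper bound of an Article 83(4) GDPR fine.

    Article 83(4) covers, among others, DPIA (Article 35) and data
    protection by design (Article 25) infringements. The ceiling is the
    higher of a fixed 10 000 000 EUR and 2% of the total worldwide annual
    turnover of the preceding financial year.
    """
    if worldwide_annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    fixed_cap = 10_000_000                             # fixed amount in EUR
    turnover_cap = 0.02 * worldwide_annual_turnover_eur  # 2% of turnover
    return max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    # Illustrative turnovers in EUR, not real company figures.
    for turnover in (200_000_000, 500_000_000, 100_000_000_000):
        print(f"{turnover:>15,} EUR turnover -> ceiling {gdpr_art83_4_max_fine(turnover):,.0f} EUR")
```

The crossover sits at 500 million EUR of turnover: below it the fixed 10 million EUR cap dominates, above it the 2% share does, so for a company with a (hypothetical) turnover of 100 billion EUR the ceiling rises to 2 billion EUR.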

Data Protection by Design

This is another interesting superpower introduced by the GDPR. Software needs to be designed with data protection in mind: by design and by default. Article 25 of the GDPR is rarely discussed seriously and meaningfully in practice, not least because still almost nobody actually knows what “data protection by design” even means. Yet an infringement carries the same maximum fine as in the case of a DPIA (10 000 000 EUR or 2% of worldwide annual turnover, whichever is higher).

The Chrome login case discussed here might be one of the best examples for such a case study. It is high-impact (lots of users), it concerns expectations and defaults, and it is about changing how things work. The clarity of the user interface, what the user expects, and what actually happens are the key elements. I am afraid there may be an issue here. Then again, perhaps Google designed the new login feature well, considering user privacy under the hood but not reflecting it in the user interface. Of course, we cannot know: it is not explained anywhere (there is no information in the release notes). The best place to find such an explanation would probably be the data protection impact assessment (previous point).

It would therefore be very interesting if Chrome made its DPIA public. Not only would that clarify the points mentioned above, it would also be an interesting, trust-building move. I often advise organisations to make their privacy impact assessments public. In this particular case, I believe doing so might do good for everyone.

EDIT: The case study has developed further. I wrote a follow-up here.

I hope you liked this little tech-GDPR exercise. Interested in high-level technical analyses of privacy & cyber, including international norms, regulations, and policy? Feel free to reach out: me@lukaszolejnik.com