Treating privacy as a technological and strategic matter is becoming standard practice. The recently published NIST Internal Report “An Introduction to Privacy Engineering and Risk Management” is an interesting attempt to systematize the understanding of privacy engineering. In this view, privacy is an important technical and strategic factor that builds trust and reputation.

NIST recommends that privacy be built directly into the system lifecycle: it should be a standard technical and organizational goal.

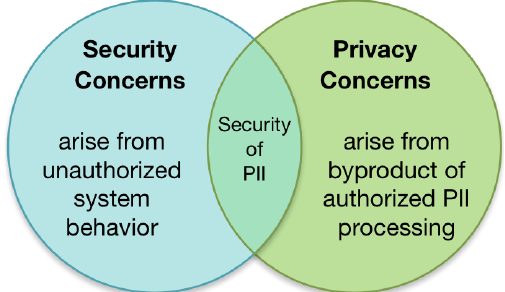

NIST recognizes that security is not the same thing as privacy, and privacy is not security. That may sound obvious, but it bears stating: the two are related, sometimes closely, yet equating them misses the point. Some problems relate strictly to security; others relate strictly to privacy. It must be kept in mind that privacy problems can arise from the data processing itself, not only from security failures. That’s why risk-based methods often differ in the case of privacy.

NIST’s view is shown schematically in the image above.

The image tries to capture the distinction between security and privacy in a very strict manner, listing the “scope of problems” related strictly to security or to privacy, with a region of overlap. While it works well visually and as a general concept, I’m not sure this rather strict division can be maintained in practice. System interdependencies and chain reactions can often shift these boundaries.

But that is exactly what security and privacy engineers need to understand: the boundaries and the relationships. It’s not an easy task.

One important point in the report is NIST’s recognition that privacy issues can arise even from authorised access to the processed data, and even when the processing proceeds exactly as designed. That’s sound reasoning.

NIST lists a number of risks resulting from the processing of private data:

- loss of trust

- loss of self-determination

- discrimination

- economic loss

I won’t expand on these points here; they are examples of consequences that may follow a privacy incident.

Privacy Engineering

NIST acknowledges that, for now, there is no strict and clear definition of privacy engineering, and adopts the following one: “privacy engineering means a specialty discipline of systems engineering focused on achieving freedom from conditions that can create problems for individuals with unacceptable consequences that arise from the system as it processes PII.”

That’s a pretty good attempt at a concise definition. The only change I would make is replacing “PII” with the more general “private data”. While the document focuses strictly on Personally Identifiable Information (PII) as the main issue in understanding privacy, the reader should take this with a grain of salt. It’s increasingly difficult to say which information is personal (PII) and which isn’t; the concept of PII is becoming fuzzy and fluid. I always warn against such simplifications.

PII is not just a simple list of “sensitive” identifiers. You can’t say “this particular item is PII, but that one isn’t”. That said, I write from a European perspective (NIST is a US body).
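To illustrate why PII resists a fixed list, here is a minimal Python sketch on a hypothetical toy dataset; the records, field names and the `count_unique` helper are illustrative assumptions, not taken from the report. None of the attributes is an identifier on its own, yet their combination singles out most of the people in the data (the well-known quasi-identifier problem).

```python
from collections import Counter

# Hypothetical toy dataset: no names, e-mail addresses or ID numbers.
records = [
    {"zip": "02138", "birth_year": 1965, "sex": "F", "diagnosis": "flu"},
    {"zip": "02138", "birth_year": 1980, "sex": "M", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1965, "sex": "M", "diagnosis": "flu"},
    {"zip": "02139", "birth_year": 1980, "sex": "F", "diagnosis": "diabetes"},
    {"zip": "02139", "birth_year": 1980, "sex": "F", "diagnosis": "flu"},
]


def count_unique(rows, attributes):
    """Count rows whose combination of values for `attributes` occurs exactly once."""
    combos = Counter(tuple(row[a] for a in attributes) for row in rows)
    return sum(1 for row in rows if combos[tuple(row[a] for a in attributes)] == 1)


# No single attribute singles anyone out...
for attr in ("zip", "birth_year", "sex"):
    print(attr, count_unique(records, [attr]))  # each prints 0
# ...but together they single out 3 of the 5 people.
print("combined", count_unique(records, ["zip", "birth_year", "sex"]))  # prints: combined 3
```

Whether a given field is “personal” therefore depends on what else sits next to it, which is exactly why a static list of identifiers is a risky simplification.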

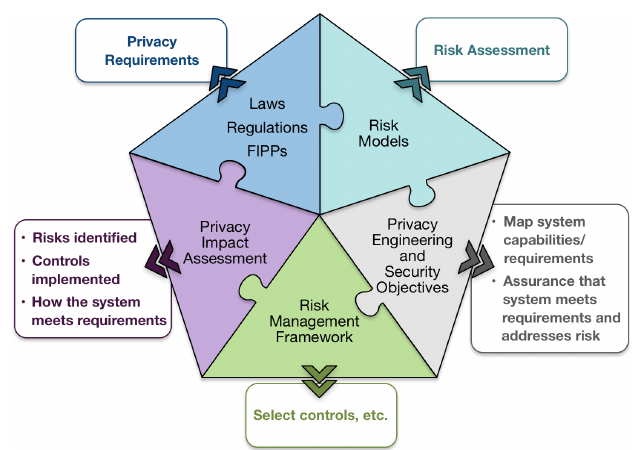

One important suggestion from NIST is to treat privacy as more than merely a compliance issue and to approach it efficiently. Privacy is a matter of strategy, management and technology, and the image above, taken from the report, highlights those points.

The privacy process requires good design, reference frameworks, technical controls (the privacy engineering phase) and measurement tools; the measurement tool here is the Privacy Impact Assessment (PIA). System owners should ask questions such as the following:

- What do we want to achieve?

- How do we want to do this?

- Do we really want to do this?

- How do we design a good system?

- Is the system functioning as expected?

- Does the system measure up when assessed with a Privacy Impact Assessment toolset?

Security in systems is traditionally represented by the Confidentiality, Integrity, Availability (CIA) triad. NIST attempts to define privacy engineering objectives and controls with a similar triad:

- Predictability

- Manageability

- Disassociability

Predictability

Predictability is meant to build trust and provide accountability, and it requires a good understanding of how data is handled in the system (how is the data processed?). The aim is to eliminate surprises in later phases of system use: it’s about knowing how the data is really used. In simple terms, it’s about never having to ask: “were/are we REALLY collecting THAT?”. Predictability is an important aspect of achieving transparency.

Additionally, predictability objectives can be met with technical measures such as de-identification and anonymization. In general, predictability should ensure that both system owners and users understand what is really going on.

The document’s “framing predictability in terms of reliable assumptions” should be understood as building privacy requirements into the system, which later allows measuring the effectiveness of particular system components and of the system as a whole.
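One way to turn the “were/are we REALLY collecting THAT?” question into a reliable, checkable assumption is to compare what a component actually emits against a declared data inventory. The following is a minimal sketch under stated assumptions: the `DECLARED_FIELDS` inventory, the purposes and the sample record are all hypothetical, and a real system would feed this check from pipeline sampling or schema registries.

```python
# Hypothetical data inventory: the fields the system owner believes are collected, per purpose.
DECLARED_FIELDS = {
    "checkout": {"order_id", "items", "shipping_address"},
    "analytics": {"page", "timestamp"},
}


def undeclared_fields(purpose, observed_record):
    """Return fields present in an observed record but absent from the declared inventory.

    An unknown purpose has no declared fields, so everything it carries is flagged.
    """
    declared = DECLARED_FIELDS.get(purpose, set())
    return set(observed_record) - declared


# A record captured from the analytics pipeline (hypothetical sample).
observed = {"page": "/cart", "timestamp": 1700000000, "device_location": "52.23,21.01"}
surprises = undeclared_fields("analytics", observed)
if surprises:
    # prints: Were we REALLY collecting THAT? ['device_location']
    print("Were we REALLY collecting THAT?", sorted(surprises))
```

Run regularly, a check like this gives a per-component measure of whether the system still matches its privacy requirements.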

Manageability

Manageability concerns the individual phases of data processing (such as collection, storage and modification), and it also relates to accountability. Manageability ensures that data can be controlled at all system layers, that this control is exercised in an accountable manner, and that the data is accurate and can be updated if needed. Manageability is also the layer of control offered to the user (privacy preferences). It helps answer questions such as this one: the user has opted out of parts of the data processing; are we actually respecting that? A system exhibiting manageability has strong data integrity and quality traits.
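The opt-out question above can be made concrete with two small pieces: a filter that processing jobs apply before they touch data, and an audit that checks what a job actually processed. This is a minimal sketch; the `User` model, the purpose names and the job log are hypothetical assumptions, not a NIST-defined structure.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    user_id: str
    # Processing purposes this user has opted out of (hypothetical preference model).
    opted_out: set = field(default_factory=set)


def eligible_for(users, purpose):
    """Return only the users who have not opted out of `purpose`."""
    return [u for u in users if purpose not in u.opted_out]


def opt_out_violations(processed_ids, users, purpose):
    """Audit: IDs of users who opted out of `purpose` but were processed for it anyway."""
    opted_out = {u.user_id for u in users if purpose in u.opted_out}
    return opted_out & set(processed_ids)


users = [User("u1"), User("u2", opted_out={"marketing"})]

# A well-behaved marketing job filters its input first...
print([u.user_id for u in eligible_for(users, "marketing")])  # ['u1']
# ...and an audit of a (hypothetical) job log catches one that did not.
print(opt_out_violations(["u1", "u2"], users, "marketing"))  # {'u2'}
```

Keeping the filter and the audit separate is deliberate: the filter enforces the preference, while the audit provides the accountable evidence that it was enforced.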

Disassociability

Disassociability is about identity, identifiers and the linkage of identifiers, which is both a design and a protection challenge. It’s a very interesting aspect of privacy engineering and, if done well, could offer genuinely privacy-preserving functionality.
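A common way to limit identifier linkage, swapped in here as an illustration rather than taken from the report, is keyed hashing with a separate secret key per processing context. The sketch below uses HMAC-SHA-256 from Python’s standard library; the context names and keys are hypothetical. Note that whoever holds the keys can still re-link the records, so this gives pseudonymity, not anonymity.

```python
import hashlib
import hmac

# Hypothetical per-context secret keys. In a real system they would come from a
# key-management service, never from source code.
CONTEXT_KEYS = {
    "analytics": b"demo-analytics-key-not-for-production",
    "support": b"demo-support-key-not-for-production",
}


def pseudonym(user_id: str, context: str) -> str:
    """Derive a stable, context-specific pseudonym with HMAC-SHA-256.

    The digest is truncated to 16 hex characters (64 bits) for readability; a
    production design would size this against its collision budget.
    """
    digest = hmac.new(CONTEXT_KEYS[context], user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


in_analytics = pseudonym("alice@example.org", "analytics")
in_support = pseudonym("alice@example.org", "support")

# Stable within one context, so records there can still be joined...
assert in_analytics == pseudonym("alice@example.org", "analytics")
# ...but different across contexts, so the two datasets do not share an identifier.
assert in_analytics != in_support
print(in_analytics, in_support)
```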

Disassociability is also the privacy engineering layer where cryptography can be applied to obfuscate data, and where techniques and properties such as anonymity, de-identification, unlinkability, unobservability and pseudonymity matter. Competent privacy engineers must stay up to date with the state of the art (research, technology, trends), as many cryptographic techniques are still in the research phase.
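As one example of a research-driven disassociability technique, not named in the text itself, differential privacy protects aggregate statistics by adding calibrated noise so that the output barely depends on any single person. Below is a minimal sketch of the Laplace mechanism for a count query, assuming sensitivity 1 (adding or removing one person changes the count by at most 1). It uses Python’s non-cryptographic `random` module for readability; real deployments need a secure randomness source and care with floating-point subtleties.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    A count has sensitivity 1, so the noise scale is 1 / epsilon: a smaller
    epsilon means stronger privacy and noisier answers.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(42)  # seeded only to make the demo repeatable; not for production
print(private_count(130, epsilon=0.5, rng=rng))
```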

NIST recognizes that the analysis framework for privacy systems will be based on risk management. Just as it is impossible to build perfectly secure systems, NIST notes, it is also impossible to build systems with perfect privacy. Here “impossible” should be understood as “infeasible” in practice, in terms of resources. Establishing the impact of a privacy risk is often a challenge and, as NIST notes, still something of a research topic. Since privacy problems affect individuals, impact should be assessed along those lines too: privacy risk modelling must take into account the impact on the individual. So far, the hidden costs of data breaches have largely been shifted onto users. At a high level, one aim of privacy engineering could be to change this.

There are, of course, other reference frames that help to measure impact, such as the costs of non-compliance, users refusing to use a particular system (rendering it useless) and loss of reputation. Again, NIST acknowledges that risk management is what the name says: management. A risk-management approach does not eradicate problems completely; it prioritizes the important ones. Privacy engineering is still a largely emerging field. Organisations must decide on the level of technical ability and expertise their teams should have in the design, deployment and Privacy Impact Assessment phases.
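The prioritization idea can be sketched with a common risk-management simplification, assumed here rather than quoted from the report: score each data action as likelihood × impact on the affected individual, rank the scores, and treat those above a chosen threshold first. The scales, example data actions and threshold below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DataActionRisk:
    name: str
    likelihood: float  # assumed scale: 0.0 (never) .. 1.0 (certain)
    impact: int        # assumed scale: 1 (minor) .. 10 (severe) for the affected individual

    @property
    def score(self) -> float:
        return self.likelihood * self.impact


def prioritize(risks, threshold):
    """Sort risks by score (highest first) and keep those at or above the treatment threshold."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [r for r in ranked if r.score >= threshold]


risks = [
    DataActionRisk("location shared with ad partner", likelihood=0.6, impact=8),
    DataActionRisk("log retention beyond policy", likelihood=0.4, impact=3),
    DataActionRisk("re-identification of research extract", likelihood=0.2, impact=9),
]
for r in prioritize(risks, threshold=1.5):
    print(f"{r.score:.1f}  {r.name}")
# prints:
# 4.8  location shared with ad partner
# 1.8  re-identification of research extract
```

The low-scoring item is not eliminated, only deferred, which mirrors the point that risk management prioritizes rather than eradicates.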

Summary

NIST is an influential body. With its recognition of the privacy engineering process, the field gains important traction. NIST’s model is technically sound, although the document is written at a rather general level and still leaves room for interpretation. I believe more documents will follow.

The message is clear: privacy is a technical and strategic matter. It must be treated in this way.