Is Privacy Sandbox’s Federated Learning of Cohorts leaking information about web browsing history? Let's find out.

Federated Learning of Cohorts (FLoC) computes a SimHash over a user's web browsing history (the list of visited websites) to obtain the cohort ID. In principle, this is a lossy computation: given only the ID, “reversing” it to obtain the list of user-visited websites should be impossible. Otherwise, anyone who knows how to associate an ID with a browsing history could learn the user's web browsing history very easily.

This is an "in principle" study, based on what we know and on the current implementation. I reckon that some of the things described here may change. Privacy Sandbox/FLoC is currently in an early testing phase.

Privacy threat model and an example attack scenario

What should the privacy threat model include? There is one inconvenient detail. Realistically speaking, let’s imagine an attacker with access to a small-to-modest computing platform. The attacker can simply precompute the IDs for many lists of visited websites, obtaining a lookup table that associates a particular ID with a particular set of websites. From then on, whenever the attacker encounters a specific cohort ID, “reversing” it is as simple as looking it up in the table to obtain the input list of websites. In other words, the table could look as follows:

- (list of visited websites), (cohort/floc ID)

- google.com youtube.com bbc.co.uk, 124680817842188

- www.thecutecats.com edps.europa.eu theguardian.com, 74021315925146

- cnil.fr reddit.com slashdot.org, 129175096709835

- lukaszolejnik.com twitter.com thecatsite.com, 250770441332148

[many more, for various combinations of some popular websites, just a demonstration]

In the above case, when a user visits lukaszolejnik.com with an ID of 250770441332148, we could reason that the visitor previously also visited these two websites: twitter.com and thecatsite.com. This is a problem because it would mean that web browsing history may be leaking, and according to my studies (confirmed by now), web browsing history is private personal data (one of the topics I have researched heavily). We may imagine that a small-to-modest computing platform can precompute a very large number of such associations. From then on, this platform could serve as a specialised deanonymisation lookup service.
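The precomputation step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the actual attack tooling and not Chrome's exact hashing: `toy_simhash` is a hypothetical stand-in that derives per-domain bits from SHA-256 and takes a majority vote per bit, so its IDs will not match the IDs listed above. The candidate site list and the history size of 3 are also just assumptions for the demo.

```python
import hashlib
from itertools import combinations

N_BITS = 50  # SimHash output size mentioned in this post


def toy_simhash(domains, n_bits=N_BITS):
    """Illustrative SimHash over a set of domains (an assumption, not Chrome's exact code)."""
    counts = [0] * n_bits
    for domain in set(domains):
        # Derive a pseudo-random bit pattern for each domain from SHA-256.
        digest = int.from_bytes(hashlib.sha256(domain.encode("utf-8")).digest(), "big")
        for bit in range(n_bits):
            counts[bit] += 1 if (digest >> bit) & 1 else -1
    # Each output bit is the majority vote of the domains' bits at that position.
    return sum(1 << bit for bit, vote in enumerate(counts) if vote > 0)


def build_lookup_table(candidate_sites, history_size):
    """Map each precomputed ID to the site combinations that produce it."""
    table = {}
    for combo in combinations(sorted(candidate_sites), history_size):
        table.setdefault(toy_simhash(combo), []).append(combo)
    return table


# Hypothetical list of "popular" sites, taken from the examples in this post.
CANDIDATE_SITES = [
    "google.com", "youtube.com", "bbc.co.uk", "www.thecutecats.com",
    "edps.europa.eu", "theguardian.com", "cnil.fr", "reddit.com",
    "slashdot.org", "lukaszolejnik.com", "twitter.com", "thecatsite.com",
]

table = build_lookup_table(CANDIDATE_SITES, history_size=3)

# The ID a site might observe from a visitor (computed here for the demo).
observed_id = toy_simhash(["lukaszolejnik.com", "twitter.com", "thecatsite.com"])

# "Reversing" the ID is then a plain dictionary lookup.
candidates = table.get(observed_id, [])
print(observed_id, candidates)
```

For a sense of scale: choosing 3 sites out of 1,000 popular ones gives roughly 166 million combinations, which is well within reach of modest hardware. A site that observes the ID also knows its own domain, so it can discard every candidate that does not contain it.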

Practical demonstration

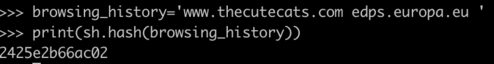

Let’s see that in practice. Seeing it is as simple as browsing the web in a clean web browser and inspecting the ID. Alternatively, the ID can be computed offline using an implementation of SimHash, with the output size currently set to 50 bits (to understand more about SimHash, see e.g. these: paper1, paper2; also, fewer than 50 of those bits are actually useful). For testing purposes, we may also use this Python simhash implementation:

from floc_simhash import SimHash

sh = SimHash(n_bits=50)

browsing_history='www.thecutecats.com edps.europa.eu'

print(int(sh.hash(browsing_history),16))

635922175929346

In the above case, the website of the European Data Protection Supervisor (edps.europa.eu), upon seeing the ID 635922175929346, could well reason that a particular visitor also previously visited another website, www.thecutecats.com, and thus learn about the user’s web browsing habits.

Defense

Defending against such attacks is not necessarily simple. It’s important to study, assess and guarantee that:

- FLoC IDs are never created when the user did not visit enough websites (it’s too simple to “reverse” an ID based on a few sites): for small numbers of visited sites, the FLoC ID should not be exposed. What counts as “small” here is a good question, and must be the topic of a dedicated study. This limit currently appears to be defined as... 3. Rather too small.

- In the computation of FLoC, perhaps do not include certain websites, like sensitive ones. Good question: who decides what is sensitive, and how is that done in a transparent manner?

- A study of FLoC ID collisions returns satisfactory results: IDs should generally not be “unique”. This calls for both theoretical and practical tests. How do collisions happen?

- Perhaps do not process raw website addresses but preprocess the input data? That said, preprocessing based on website categories could still leak some contextual information.
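Two of the checks above (a minimum-history threshold and a collision study) can be sketched as follows. This is only an illustration under stated assumptions: `toy_simhash` is a hypothetical stand-in for the real hashing (SHA-256 bits with a per-bit majority vote), `MIN_SITES = 7` is an arbitrary value chosen for the demo rather than a recommendation, and the synthetic `siteN.example` histories are not real browsing data. The idea is to count how many distinct histories share each ID; an ID shared by only one history is effectively unique.

```python
import hashlib
from collections import Counter
from itertools import combinations


def toy_simhash(domains, n_bits=50):
    """Illustrative SimHash over a set of domains (an assumption, not Chrome's exact code)."""
    counts = [0] * n_bits
    for domain in set(domains):
        digest = int.from_bytes(hashlib.sha256(domain.encode("utf-8")).digest(), "big")
        for bit in range(n_bits):
            counts[bit] += 1 if (digest >> bit) & 1 else -1
    # Majority vote per bit position.
    return sum(1 << bit for bit, vote in enumerate(counts) if vote > 0)


MIN_SITES = 7  # hypothetical threshold, for illustration only


def cohort_id_or_none(history, min_sites=MIN_SITES):
    """Return no ID at all when the history is too short to hide in a crowd."""
    unique_sites = set(history)
    if len(unique_sites) < min_sites:
        return None
    return toy_simhash(unique_sites)


def anonymity_set_sizes(histories, n_bits):
    """Count how many histories map to each ID (larger counts mean more collisions)."""
    return Counter(toy_simhash(history, n_bits) for history in histories)


# Synthetic data: every 3-site history drawn from 8 made-up sites (56 histories).
SITES = [f"site{i}.example" for i in range(8)]
HISTORIES = list(combinations(SITES, 3))

sizes_50 = anonymity_set_sizes(HISTORIES, n_bits=50)
sizes_4 = anonymity_set_sizes(HISTORIES, n_bits=4)

print("smallest anonymity set at 50 bits:", min(sizes_50.values()))
print("largest anonymity set at 4 bits:", max(sizes_4.values()))
```

With only 4 output bits there are at most 16 IDs for 56 histories, so collisions are guaranteed; with 50 bits and short histories, collisions become very unlikely, which is exactly the situation the lookup-table attack exploits.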

I guess that we should expect a detailed privacy design document at some point. Surely the users would never expect that merely visiting a website leaks all the information about their previously-visited websites? With such contextual information, they could be tracked or invasively micro-targeted. The final deployment of this system would need to consider such privacy risks.

Summary

My previous research indicates that web browsing histories are sensitive (e.g. they convey information about the user's demographics, psychological portrait, etc.) and can be fairly stable. This would mean that the IDs could well be sensitive, too. Privacy protection guarantees must be studied to demonstrate the privacy margins.

Did you like the assessment and analysis? Any questions, comments, complaints, or offers of engagement? Feel free to reach out: me@lukaszolejnik.com

I described a privacy attack that uses precomputed SimHashes to discover a user’s web browsing history. This is a valid privacy threat. In the previous iteration, I looked at a possible leak about web browsing in incognito mode. Google’s Privacy Sandbox is certainly an interesting development from the point of view of privacy research, strategy, engineering, and PR. It will be fascinating to see how it develops. Looking forward to it. Let’s hope that the creators are invested in developing the proposal on all fronts.